
🐫 CAMEL is an open-source community dedicated to finding the scaling laws of agents. We believe that studying these agents on a large scale offers valuable insights into their behaviors, capabilities, and potential risks. To facilitate research in this field, we implement and support various types of agents, tasks, prompts, models, and simulated environments.

Join us (Discord, WeChat or Slack) in pushing the boundaries of finding the scaling laws of agents.

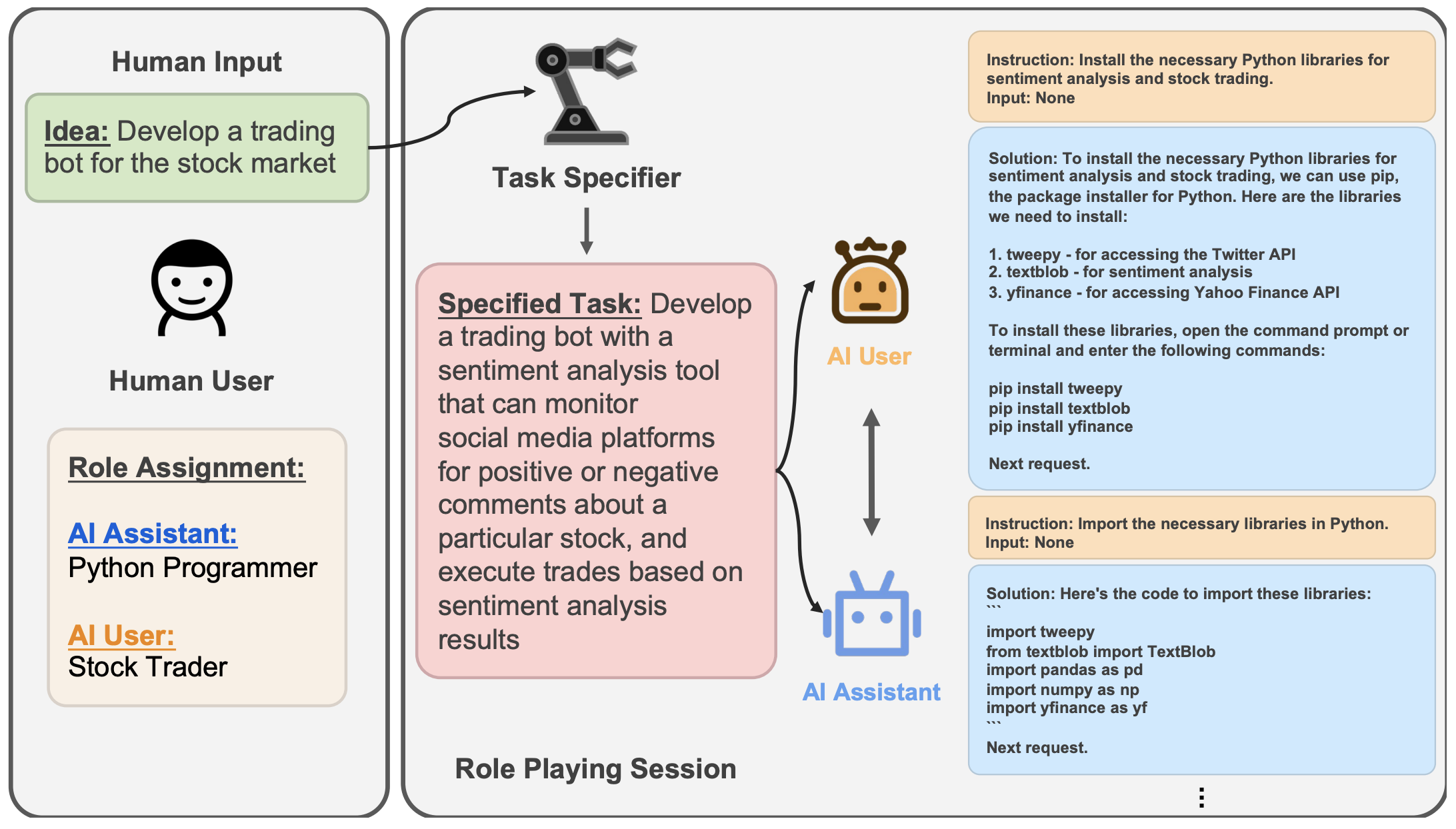

We provide a demo showcasing a conversation between two ChatGPT agents, playing the roles of a Python programmer and a stock trader, who collaborate on developing a trading bot for the stock market.

To install the base CAMEL library:

pip install camel-ai

Some features require extra dependencies:

- To install with all dependencies:

pip install 'camel-ai[all]'

- To use the HuggingFace agents:

pip install 'camel-ai[huggingface-agent]'

- To enable RAG or use agent memory:

pip install 'camel-ai[tools]'
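After any of the pip commands above, it can be useful to confirm that the package actually landed in the active environment. The following is a minimal sketch using only the standard library; the helper name `installed_version` is hypothetical and not part of CAMEL.

```python
from importlib import metadata
from typing import Optional


def installed_version(distribution: str = "camel-ai") -> Optional[str]:
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return metadata.version(distribution)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    version = installed_version()
    if version is None:
        print("camel-ai is not installed in this environment")
    else:
        print(f"camel-ai {version} is installed")
```

Running this inside the wrong virtual environment reports the package as missing, which is a common cause of import errors after installation.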

Install CAMEL from source with poetry (Recommended):

# Make sure your Python version is 3.10 or later

# You can use pyenv to manage multiple python versions in your system

# Clone github repo

git clone https://github.com/camel-ai/camel.git

# Change directory into project directory

cd camel

# If you didn't install poetry before

pip install poetry # (Optional)

# We suggest using python 3.10

poetry env use python3.10 # (Optional)

# Activate CAMEL virtual environment

poetry shell

# Install the base CAMEL library

# It takes about 90 seconds

poetry install

# Install CAMEL with all dependencies

poetry install -E all # (Optional)

# Exit the virtual environment

exit

Tip

If you encounter errors when running poetry install, it may be due to a cache-related problem. You can try running:

poetry install --no-cache

Install CAMEL from source with conda and pip:

# Create a conda virtual environment

conda create --name camel python=3.10

# Activate CAMEL conda environment

conda activate camel

# Clone github repo

git clone -b v0.2.3 https://github.com/camel-ai/camel.git

# Change directory into project directory

cd camel

# Install CAMEL from source

pip install -e .

# Or if you want to use all other extra packages

pip install -e .[all] # (Optional)

Detailed guidance can be found here
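Both source installs above are written around Python 3.10. As a quick sanity check before running them, the sketch below tests the interpreter version; it assumes 3.10 is the minimum, and the names `MIN_PYTHON` and `is_supported` are illustrative only.

```python
import sys
from typing import Sequence, Tuple

# Assumption: 3.10 is the minimum version, as the install comments suggest.
MIN_PYTHON: Tuple[int, int] = (3, 10)


def is_supported(
    version_info: Sequence[int] = sys.version_info,
    minimum: Tuple[int, int] = MIN_PYTHON,
) -> bool:
    """Return True when the (major, minor) version is at least `minimum`."""
    return tuple(version_info[:2]) >= minimum


if __name__ == "__main__":
    current = ".".join(str(part) for part in sys.version_info[:3])
    status = "ok" if is_supported() else "too old"
    print(f"Python {current}: {status}")
```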

CAMEL package documentation pages.

You can find a list of tasks for different sets of assistant and user role pairs here.

As an example, to run the role_playing.py script:

First, you need to add your OpenAI API key to system environment variables. The method to do this depends on your operating system and the shell you're using.

For Bash shell (Linux, macOS, Git Bash on Windows):

# Export your OpenAI API key

export OPENAI_API_KEY=<insert your OpenAI API key>

# Optional: set this only if you use an OpenAI proxy service

export OPENAI_API_BASE_URL=<insert your OpenAI API base URL>

For Windows Command Prompt:

REM export your OpenAI API key

set OPENAI_API_KEY=<insert your OpenAI API key>

REM Optional: set this only if you use an OpenAI proxy service

set OPENAI_API_BASE_URL=<insert your OpenAI API base URL>

For Windows PowerShell:

# Export your OpenAI API key

$env:OPENAI_API_KEY="<insert your OpenAI API key>"

# Optional: set this only if you use an OpenAI proxy service

$env:OPENAI_API_BASE_URL="<insert your OpenAI API base URL>"

Replace <insert your OpenAI API key> with your actual OpenAI API key in each case. Make sure there are no spaces around the = sign.

After setting the OpenAI API key, you can run the script:

# You can change the role pair and initial prompt in role_playing.py

python examples/ai_society/role_playing.py

Please note that the environment variable is session-specific. If you open a new terminal window or tab, you will need to set the API key again in that new session.
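Because the key only lives in the current session, a script can fail much later with an authentication error when run from a fresh terminal. The sketch below checks up front whether the variable is present; `missing_openai_settings` is a hypothetical helper, not something CAMEL provides.

```python
import os
from typing import List, Mapping, Optional


def missing_openai_settings(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return the names of required variables that are unset or blank."""
    env = os.environ if env is None else env
    required = ["OPENAI_API_KEY"]  # OPENAI_API_BASE_URL is optional
    return [name for name in required if not env.get(name, "").strip()]


if __name__ == "__main__":
    missing = missing_openai_settings()
    if missing:
        raise SystemExit(f"Set these variables first: {', '.join(missing)}")
    print("OpenAI settings found in this session")
```

Passing a plain dictionary instead of reading `os.environ` keeps the check easy to exercise without touching the real environment.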

- Download Ollama.

- After setting up Ollama, pull the Llama3 model by typing the following command into the terminal:

ollama pull llama3

- Run the script. Enjoy your Llama3 model, enhanced by CAMEL's excellent agents.

from camel.agents import ChatAgent
from camel.messages import BaseMessage
from camel.models import ModelFactory
from camel.types import ModelPlatformType

ollama_model = ModelFactory.create(
    model_platform=ModelPlatformType.OLLAMA,
    model_type="llama3",
    model_config_dict={"temperature": 0.4},
)

assistant_sys_msg = BaseMessage.make_assistant_message(
    role_name="Assistant",
    content="You are a helpful assistant.",
)
agent = ChatAgent(assistant_sys_msg, model=ollama_model, token_limit=4096)

user_msg = BaseMessage.make_user_message(
    role_name="User", content="Say hi to CAMEL"
)
assistant_response = agent.step(user_msg)
print(assistant_response.msg.content)
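If the script cannot reach the model, one thing to rule out is that the pull step did not complete. The sketch below asks a local Ollama server which models it has. It assumes the server listens on Ollama's default address `http://localhost:11434` and that its `/api/tags` endpoint returns a JSON object with a `models` list whose entries have a `name` field. The helper names are hypothetical.

```python
import json
import urllib.request
from typing import Any, Dict, List

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434"


def local_model_names(tags: Dict[str, Any]) -> List[str]:
    """Extract model names from a parsed /api/tags response."""
    return [entry["name"] for entry in tags.get("models", [])]


def has_model(tags: Dict[str, Any], model: str) -> bool:
    """Check for a model, ignoring the tag suffix (e.g. 'llama3:latest')."""
    return any(
        name.split(":", 1)[0] == model for name in local_model_names(tags)
    )


def fetch_tags(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> Dict[str, Any]:
    """Query the server; raises URLError if it is not reachable."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return json.load(resp)


if __name__ == "__main__":
    print("llama3 available:", has_model(fetch_tags(), "llama3"))
```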

- Install vLLM

- After setting up vLLM, start an OpenAI-compatible server, for example:

python -m vllm.entrypoints.openai.api_server --model microsoft/Phi-3-mini-4k-instruct --api-key vllm --dtype bfloat16

- Create and run the following script (for more details, please refer to this example):

from camel.agents import ChatAgent
from camel.messages import BaseMessage
from camel.models import ModelFactory
from camel.types import ModelPlatformType

vllm_model = ModelFactory.create(
    model_platform=ModelPlatformType.VLLM,
    model_type="microsoft/Phi-3-mini-4k-instruct",
    url="http://localhost:8000/v1",
    model_config_dict={"temperature": 0.0},
    api_key="vllm",
)

assistant_sys_msg = BaseMessage.make_assistant_message(
    role_name="Assistant",
    content="You are a helpful assistant.",
)
agent = ChatAgent(assistant_sys_msg, model=vllm_model, token_limit=4096)

user_msg = BaseMessage.make_user_message(
    role_name="User",
    content="Say hi to CAMEL AI",
)
assistant_response = agent.step(user_msg)
print(assistant_response.msg.content)
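Before pointing an agent at the server, it may help to confirm that it is up and serving the expected model. The sketch below queries the OpenAI-style `/models` route under the same base URL and API key used above. It assumes the server follows the usual OpenAI response shape (a `data` list of objects with an `id`), and the function names are illustrative only.

```python
import json
import urllib.request
from typing import Any, Dict, List


def models_request(base_url: str, api_key: str) -> urllib.request.Request:
    """Build an authenticated GET request for the model listing route."""
    url = base_url.rstrip("/") + "/models"
    return urllib.request.Request(
        url, headers={"Authorization": f"Bearer {api_key}"}
    )


def served_model_ids(payload: Dict[str, Any]) -> List[str]:
    """Extract model ids from a parsed model listing response."""
    return [entry["id"] for entry in payload.get("data", [])]


def list_served_models(
    base_url: str = "http://localhost:8000/v1",
    api_key: str = "vllm",
    timeout: float = 5.0,
) -> List[str]:
    """Query the server; raises URLError if it is not reachable."""
    request = models_request(base_url, api_key)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return served_model_ids(json.load(resp))


if __name__ == "__main__":
    print(list_served_models())
```

The id reported by the server should match the `model_type` string passed to `ModelFactory.create`.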

| Dataset | Chat format | Instruction format | Chat format (translated) |
|---|---|---|---|
| AI Society | Chat format | Instruction format | Chat format (translated) |
| Code | Chat format | Instruction format | x |
| Math | Chat format | x | x |
| Physics | Chat format | x | x |
| Chemistry | Chat format | x | x |
| Biology | Chat format | x | x |

| Dataset | Instructions | Tasks |
|---|---|---|
| AI Society | Instructions | Tasks |
| Code | Instructions | Tasks |
| Misalignment | Instructions | Tasks |

We implement research ideas from other works so that you can build, compare, and customize your agents. If you use any of these modules, please cite the original works:

- TaskCreationAgent, TaskPrioritizationAgent and BabyAGI from Nakajima et al.: Task-Driven Autonomous Agent. [Example]

- Released AI Society and Code dataset (April 2, 2023)

- Initial release of CAMEL Python library (March 21, 2023)

@inproceedings{li2023camel,
  title={CAMEL: Communicative Agents for "Mind" Exploration of Large Language Model Society},
  author={Li, Guohao and Hammoud, Hasan Abed Al Kader and Itani, Hani and Khizbullin, Dmitrii and Ghanem, Bernard},
  booktitle={Thirty-seventh Conference on Neural Information Processing Systems},
  year={2023}
}

Special thanks to Nomic AI for giving us extended access to their data set exploration tool (Atlas).

We would also like to thank Haya Hammoud for designing the initial logo of our project.

The source code is licensed under Apache 2.0.

We appreciate your interest in contributing to our open-source initiative. We provide a document of contributing guidelines which outlines the steps for contributing to CAMEL. Please refer to this guide to ensure smooth collaboration and successful contributions. 🤝🚀

For more information please contact camel.ai.team@gmail.com.